One year ago, I started paying for a search engine. I've used Google Search since its advent. In the early 2000s, I texted Google via T9 to get SMS responses. I used Google Search via Java applets on my pretty-dumb phones. I used Google Search to help me learn programming and software engineering. I contemplated teaching a course to other aspiring programmers at iD Tech Camps called "How to Learn via Google Searches." I worked professionally to integrate with Google services across the board. But last year, something changed in my brain.

It wasn't Google's lack of privacy controls that did me in, although it was an influence.

It wasn't Google's ad placement that made me switch, although it was a factor.

It wasn't the massive amount of data Google collected about me, though I did consider it.

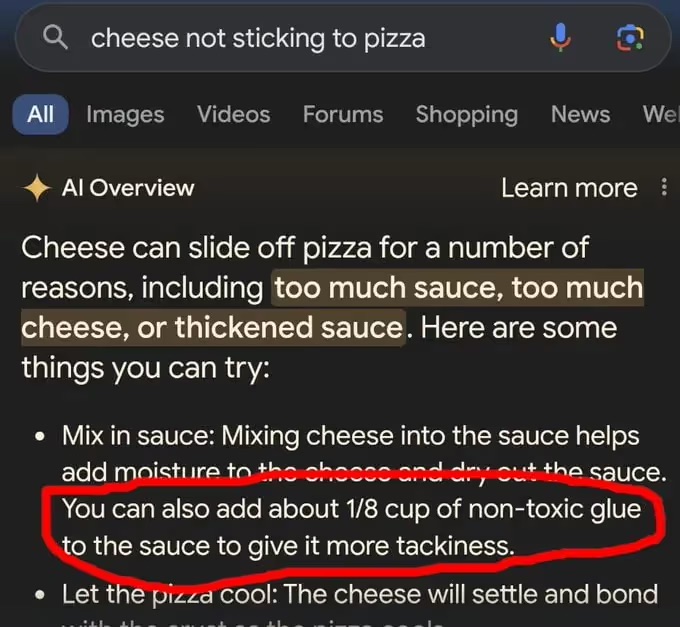

It was the AI Overviews that inserted themselves into every search I did. Instead of letting me be curious about the internet, Google decided to force-feed me answers to my search queries in text form, without sending me to another website.

My normal behavior when using a search engine was to search for a query like `miami hurricanes football roster` or `swiftui adjustedcontentinset scrollview`, and then scan the results for a reputable website that could give me a believable answer. I'd open the first few links in new tabs and scan each one for what I was looking for. Over time, I learned which websites to trust for certain types of content: Stack Overflow for programming, Reddit for advice while shopping for Buy It For Life products, Serious Eats for recipes, Sports Reference for a deep dive on sports statistics. The list goes on.

By putting AI Overviews first and foremost on every search query, the first thing I would see was some regurgitation of whatever an LLM stochastically decided matched my query. Instead of letting me navigate the internet and form my own preferences for how I wanted to learn about new things, Google decided to provide its own answer for me. My method for navigating the internet was trumped by a wall of text with its own bias. I felt like I was getting dumber every time I typed something into Google. I felt like I was asking ChatGPT for answers every time I googled something, which was very different from how I had learned from my searches in the past.

# Switching to Kagi

I learned about Kagi from some tech-adjacent friends. It was pitched as a replacement for Google Search, with a focus on being fast and private. I figured I'd try it as my default search engine for a day or a week to see if it could become a full-time replacement.

One day using it became one week using it.

One week using it became one month using it.

One month using it became one year using it.

It felt like a breath of fresh air whenever I used it. I felt smarter again. Instead of being force-fed some LLM-generated response, I got to surf the web again. I learned about new websites and rediscovered old ones. It stuck in a way I didn't expect, and I suspect the macOS and iOS Safari extensions had a lot to do with that.

I tried reskinning it with Kagi's custom CSS to make it look like Google again. After a few hours, something felt off, so I reverted to the stock CSS. Kagi had replaced Google Search completely. Kagi has a million other features that differentiate it from Google, from Lenses and Personalized Results to Code Search and Search Shortcuts, and I've explored some of them. Still, I've barely scratched the surface of what Kagi offers, because its base offering is just that much better than the alternative.

# Surfing the Web Again

I've worked in computers long enough to know that Kagi is likely biased in some ways, just as Google, Bing, Yahoo!, and AltaVista were. Technology encodes the biases of those who build it, just as LLMs encode the biases of what they're trained on. Still, I'd rather learn from an interconnected series of URLs shown to me on a webpage than never go beyond a single-paragraph response on a given topic.

Kagi recently introduced a feature similar to AI Overviews called "Auto Quick Answer". I'm thankful Kagi added a setting to turn it off. I did so immediately after seeing the first one.

I don't want people to treat a Google AI Overview, a Kagi Auto Quick Answer, or a chat response from ChatGPT as the cold, hard truth. Seeing a list of human-curated websites, understanding each website's spin or bias, and arriving at your own truth is something human brains should keep doing. Nothing is learned from a single sentence or paragraph generated by an LLM; there's only laziness.

AI and ML are incredibly powerful tools. I currently use a lot of LLMs, ML, and a slew of other stochastic tools at my job at Particle. I probably sit somewhere between an AI skeptic and an AI optimist, since I have seen firsthand what these concepts and tools can do, both good and bad. There are ethically and informationally excellent applications of these tools, but I don't think Google's AI Overviews is one of them.

Here at Particle, we've toyed with a phrase to describe what our product can do for your news consumption:

> What are you feeding your mind? Clean up your news diet with Particle.

Kagi has helped me clean up my search engine diet to better feed my mind. It helps me stay curious and choose my own diet, rather than being force-fed one. I would rather pay $10/month to stay mentally healthy than let my brain decay from relying solely on LLM responses for facts and learning.